Project Objective

Develop an AI-based system capable of detecting and classifying Asian hornets in video footage.

Minimize false positives by distinguishing hornets from bees.

Ensure fast and efficient processing on resource-limited devices.

Methodology

- Labeling and Data Preparation

Label Studio was used to annotate images extracted from videos.

The dataset deliberately included a large number of hornet examples to maximize hornet detection.

Bees were also included, in a smaller and controlled quantity, so that the model learns to tell them apart from hornets and produces fewer false positives.

Each annotated image was exported with a matching text file listing the position of every bounding box and its class.
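To illustrate what such an image/text pair can contain, the sketch below assumes the common YOLO text format: one line per box, with a class index followed by the box center and size normalized to the image dimensions. The helper name `to_yolo_line` and the class mapping (0 = hornet, 1 = bee) are assumptions for illustration, not details taken from the project.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space bounding box into one YOLO-format label line."""
    # Box center and size, each normalized to the [0, 1] range.
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical class mapping: 0 = hornet, 1 = bee.
line = to_yolo_line(0, 100, 200, 300, 400, img_w=1000, img_h=800)
print(line)  # 0 0.200000 0.375000 0.200000 0.250000
```

Normalized coordinates keep the labels valid if the images are later resized.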

- Detection Model

Utilization of the YOLOv10 Small model, ideal for environments with limited computational resources.

Fine-tuning on the labeled dataset to enhance accuracy.

Performance evaluation using precision, recall, and loss curves.
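As a reminder of how precision and recall are typically obtained for a detector, the sketch below matches predicted boxes to ground-truth boxes of one class by intersection over union (IoU). The 0.5 threshold and the greedy matching are assumptions; standard evaluation tools also sort predictions by confidence, which is omitted here for brevity.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching of predicted boxes to ground-truth boxes."""
    unmatched = list(ground_truth)
    true_positives = 0
    for pred in predictions:
        # Pick the still-unmatched ground-truth box that overlaps this prediction most.
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            unmatched.remove(best)
            true_positives += 1
    false_positives = len(predictions) - true_positives
    false_negatives = len(unmatched)
    precision = true_positives / (true_positives + false_positives) if predictions else 0.0
    recall = true_positives / (true_positives + false_negatives) if ground_truth else 0.0
    return precision, recall


# Two real hornets, three predictions (one of them spurious).
truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(1, 1, 10, 10), (50, 50, 60, 60), (20, 20, 30, 31)]
precision, recall = precision_recall(preds, truth)
print(f"precision={precision:.2f}, recall={recall:.2f}")  # precision=0.67, recall=1.00
```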

- Solution Structure

A single script handles the entire pipeline:

Extracting images from the video footage.

Formatting the images.

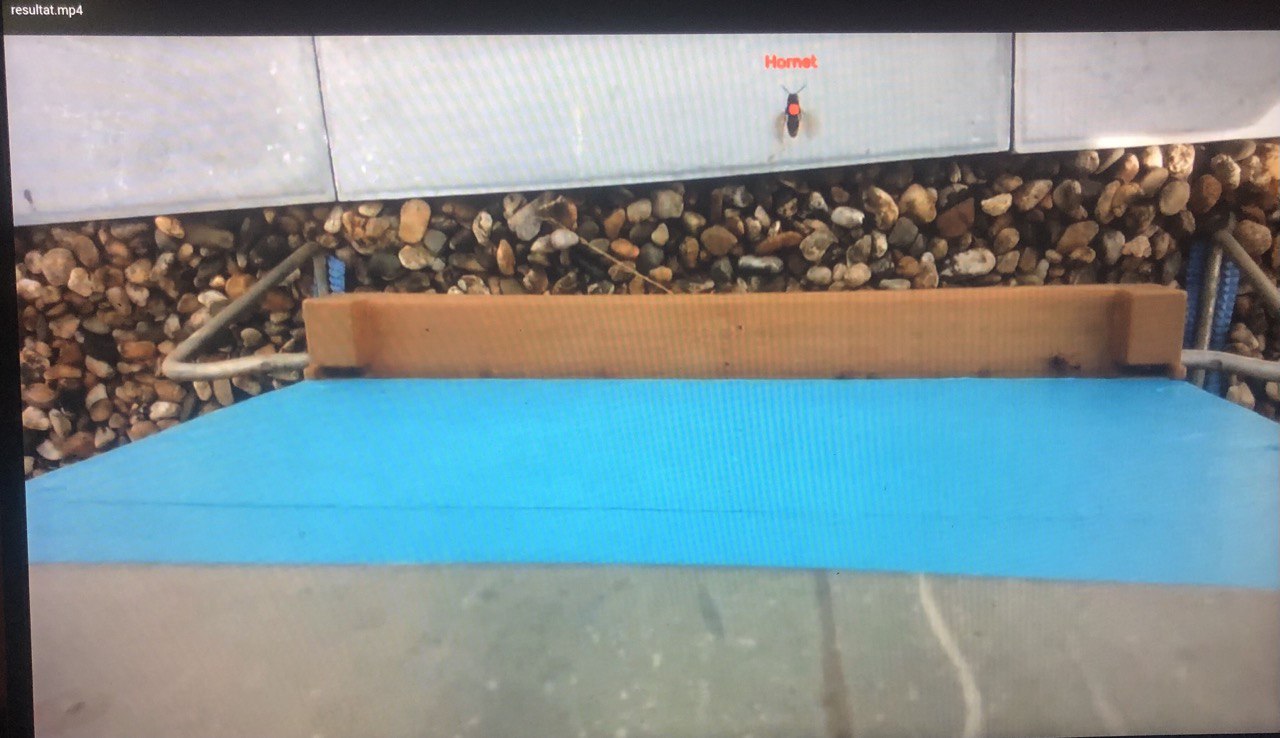

Predicting object classes with YOLOv10 (~40 ms per image).

Generating and displaying results using OpenCV.
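The four steps above could be wired together roughly as follows. This is a minimal sketch, not the project's actual script: it assumes the `ultralytics` package is used to load the fine-tuned YOLOv10 weights, that "formatting" means resizing each frame so its longer side is 640 pixels, and that only every fifth frame is processed. The names `fit_size`, `run_pipeline`, `stride`, and `target` are hypothetical.

```python
def fit_size(width, height, target=640):
    """Scale (width, height) so the longer side equals `target`, keeping the aspect ratio."""
    scale = target / max(width, height)
    return round(width * scale), round(height * scale)


def run_pipeline(video_path, weights_path, stride=5, target=640):
    """Extract frames, format them, run the detector, and display annotated results."""
    # Imported here so fit_size stays usable without the heavy dependencies.
    import cv2
    from ultralytics import YOLO

    model = YOLO(weights_path)  # fine-tuned weights; the path is supplied by the caller
    capture = cv2.VideoCapture(video_path)
    index = 0
    try:
        while True:
            ok, frame = capture.read()  # 1. extract a frame from the video
            if not ok:
                break
            if index % stride == 0:
                height, width = frame.shape[:2]
                frame = cv2.resize(frame, fit_size(width, height, target))  # 2. format
                result = model(frame, verbose=False)[0]  # 3. predict boxes and classes
                cv2.imshow("detections", result.plot())  # 4. display annotated frame
                if cv2.waitKey(1) & 0xFF == ord("q"):  # press q to stop early
                    break
            index += 1
    finally:
        capture.release()
        cv2.destroyAllWindows()
```

A call such as `run_pipeline("footage.mp4", "best.pt")` would then process one video; both file names are placeholders.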

Results

Precision/Recall for Hornets:

Precision: 0.65

Recall: 0.92

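The high recall means few false negatives (about 8% of hornets are missed), while the lower precision means about 35% of hornet detections are false positives.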

Results align with the project’s objective.
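To make these two figures more concrete, the short calculation below converts them into expected counts for a hypothetical batch of 100 real hornets. The batch size is an illustrative assumption; only the precision and recall values come from the results above.

```python
def implied_counts(precision, recall, n_hornets):
    """Expected detection counts implied by precision and recall for n_hornets real hornets."""
    true_positives = recall * n_hornets
    false_negatives = n_hornets - true_positives
    # precision = TP / (TP + FP)  =>  FP = TP * (1 - precision) / precision
    false_positives = true_positives * (1 - precision) / precision
    return true_positives, false_negatives, false_positives


tp, fn, fp = implied_counts(precision=0.65, recall=0.92, n_hornets=100)
print(f"TP={tp:.1f}, FN={fn:.1f}, FP={fp:.1f}")  # TP=92.0, FN=8.0, FP=49.5
```

In other words, for every 100 hornets present, about 92 would be detected, 8 missed, and roughly 50 other objects would be wrongly flagged as hornets.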

Limitations and Biases

Test images were sourced from the same videos as the training set.

Risk of bias due to similar lighting conditions, colors, and backgrounds.
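One common way to reduce this kind of bias, which the project does not report having used, is to split the data by source video so that no video contributes frames to both the training and test sets. The sketch below assumes each frame is stored as a `(video_id, frame_path)` pair; the function name, the 20% test fraction, and the fixed seed are illustrative assumptions.

```python
import random
from collections import defaultdict


def split_by_video(frames, test_fraction=0.2, seed=0):
    """Split (video_id, frame_path) pairs so that no video appears in both sets."""
    by_video = defaultdict(list)
    for video_id, frame_path in frames:
        by_video[video_id].append(frame_path)
    if len(by_video) < 2:
        raise ValueError("need at least two source videos to split by video")

    video_ids = sorted(by_video)
    random.Random(seed).shuffle(video_ids)  # reproducible shuffle of whole videos
    n_test = max(1, round(len(video_ids) * test_fraction))
    test_ids = set(video_ids[:n_test])

    train = [p for v in video_ids if v not in test_ids for p in by_video[v]]
    test = [p for v in video_ids if v in test_ids for p in by_video[v]]
    return train, test, test_ids


# Five hypothetical videos with three frames each.
frames = [(f"video_{v}", f"video_{v}/frame_{i}.jpg") for v in range(5) for i in range(3)]
train, test, test_ids = split_by_video(frames)
print(len(train), len(test))  # 12 3
```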

Future Improvements

Enhancements to the Current Model:

Increase dataset size.

Experiment with different labeling strategies (e.g., labeling all bees in an image).

Potential Future Developments:

Utilize the hovering behavior of hornets to refine detection.

Combine object detection with advanced image processing techniques.
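As one possible reading of the hovering idea, the sketch below flags a tracked object as hovering when its bounding-box center stays near the same spot over several consecutive frames. It assumes that detections are already linked across frames into a list of `(x, y)` centers for the same object; the function name, the 10-frame window, and the 15-pixel drift limit are hypothetical values that would need tuning on real footage.

```python
from math import hypot


def is_hovering(centers, window=10, max_drift=15.0):
    """Return True if the last `window` centers all lie within `max_drift` pixels of their mean."""
    if len(centers) < window:
        return False  # not enough history to decide
    recent = centers[-window:]
    mean_x = sum(x for x, _ in recent) / window
    mean_y = sum(y for _, y in recent) / window
    return all(hypot(x - mean_x, y - mean_y) <= max_drift for x, y in recent)


# An object jittering around one point versus one crossing the frame.
hovering_track = [(100 + (i % 2), 200) for i in range(10)]
passing_track = [(100 + 20 * i, 200) for i in range(10)]
print(is_hovering(hovering_track), is_hovering(passing_track))  # True False
```

A signal like this could be combined with the detector's class score, for example to raise confidence in a hornet detection only when the object also hovers.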